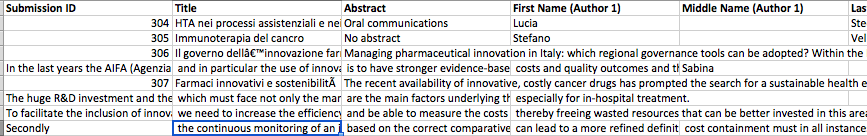

> I have tested it, and the imported XLS table comes out very garbled.

Hmm, it looks to me like those spreadsheets may need to be imported or opened with a specific delimiter, which might be why they look garbled in your instance. @pmangahis, have you encountered this before? Do you have any recommendations for making sure the columns show up properly when a report is opened?

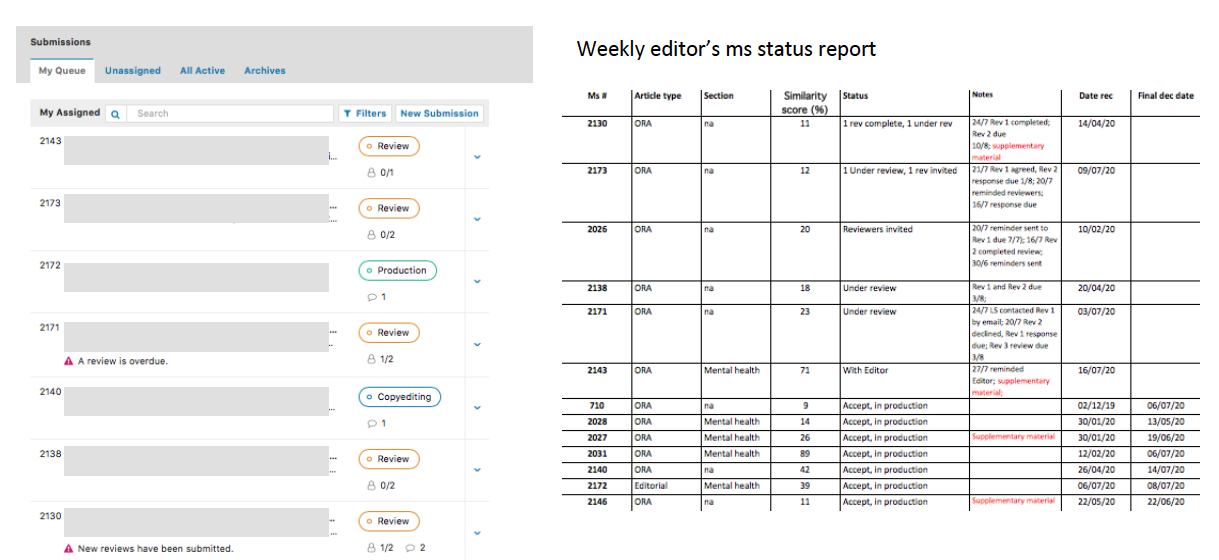

> Here is a comparative view of the submissions tab in OJS and my report (anonymised).

Thanks for that! This aligns with a lot of the feedback we've gotten from focus groups run at our community sprints. Editors want more insight into what's going on within a stage, particularly the review stage, and how long a submission has sat with editors or reviewers.

We've collected a number of these requests in a project on GitHub. I think the most relevant to your needs is the work to improve visualisation of review stage status. If you see any proposals in there that you particularly like, let us know!

We don’t have this work scheduled at the moment but we know it’s a priority and we want to get to it as soon as possible.

> You cannot sort submissions by status.

This is also a community priority.

> There is no alert for "Response late", for example (while there is one for "A review is overdue").

This alert should appear whether the reviewer has missed the response due date or the review due date. I've heard before that these two cases should be distinguished more clearly, so this is further confirmation of that.

> As a journal manager, you cannot see in the submissions table which editor is assigned.

The next major version (3.3) will introduce a filter for journal managers to view submissions by assigned editor.

> When you open a submission's details, you lose the manuscript (ms) number.

This is addressed in v3.2.

> You can notify a participant but assign a file,

I think we've had this request before, but I couldn't find it filed, so I created a feature request.